That copy is missing the chapters. Here they are:

00:00:00 - The NVIDIA AI GPU Black Market

00:06:06 - WE NEED YOUR HELP

00:07:41 - A BIG ADVENTURE

00:10:10 - Ignored by the US

00:11:46 - BACKGROUND: Why They're Banned

00:16:04 - TIMELINE

00:21:32 - H20 15 Percent Revenue Share with the US

00:26:01 - Calculating BANNED GPUs

00:29:31 - OUR INFORMANTS

00:31:47 - THE SMUGGLING PIPELINE

00:33:39 - PART 1: HONG KONG Demand Drivers

00:43:14 - PART 1: How Do Suppliers Get the GPUs?

00:48:18 - PART 1: GPU Rich and GPU Poor

00:56:19 - PART 1: DATACENTER with Banned GPUs, AMD, Intel

01:06:19 - PART 1: Chinese Military, Huawei GPUs

01:09:48 - PART 1: How China Circumvents the Ban

01:19:30 - PART 1: GPU MARKET in Hong Kong

01:32:39 - WIRING MONEY TO CHINA

01:36:29 - PART 2: CHINA Smuggling Process

01:43:26 - PART 3: SHENZHEN's GPU MIDDLEMEN

01:50:22 - PART 3: AMD and INTEL GPUs Unwanted

01:56:34 - PART 4: THE GPU FENCE

02:06:01 - PART 4: FINDING the GPUs

02:15:12 - PART 4: THE FIXER IC Supplier

02:21:12 - PART 5: GPU WAREHOUSE

02:27:17 - PART 6: CHOP SHOP and REPAIR

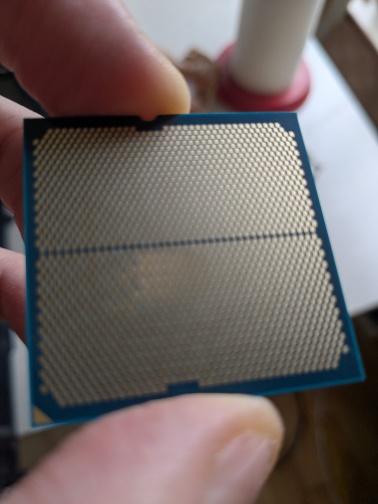

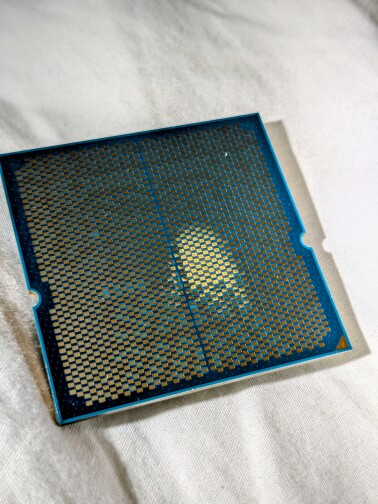

02:34:52 - PART 6: BUILD a Custom AI GPU

02:56:33 - PART 7: FACTORY

03:01:01 - PART 8: TAIWAN and SINGAPORE Intermediaries

03:02:06 - PART 9: SMUGGLER

03:05:11 - LEGALITY of Buying and Selling

03:08:05 - CORRUPTION: NVIDIA and Governments

03:26:51 - SIGNOFF