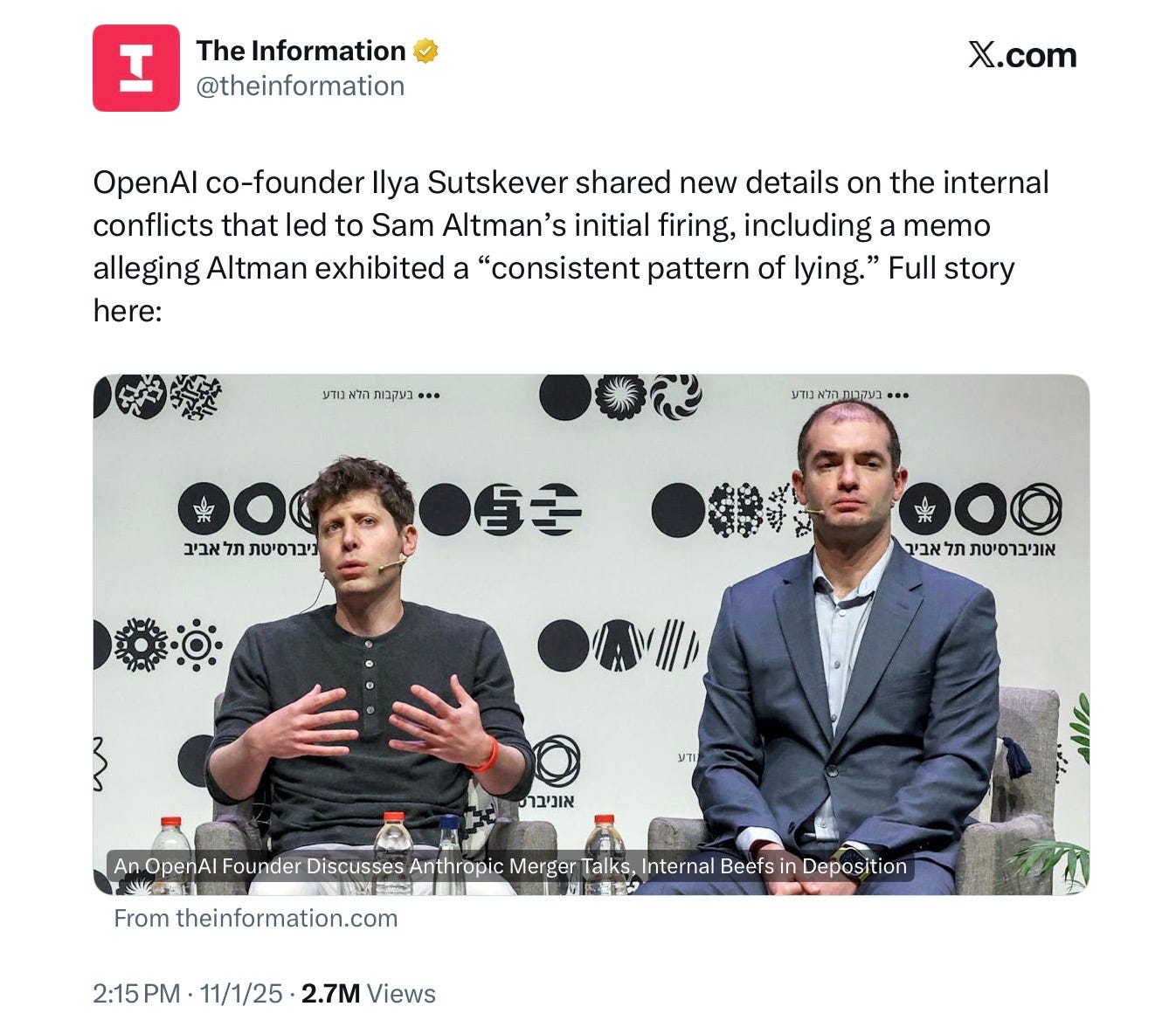

The fact that Sam Altman is a liar is no longer news. As I argued here in late 2023, Altman truly was fired for being “not completely candid” — just like the board said. Recent books by Karen Hao and Keach Hagey pretty much confirm this. I dissected his 2023 Senate testimony here.

But just in case anyone was still seriously in doubt, a just-released 62-page deposition from Ilya Sutskever ought to seal the deal:

A recent lawsuit reinforces the sense that employees no longer trust Altman:

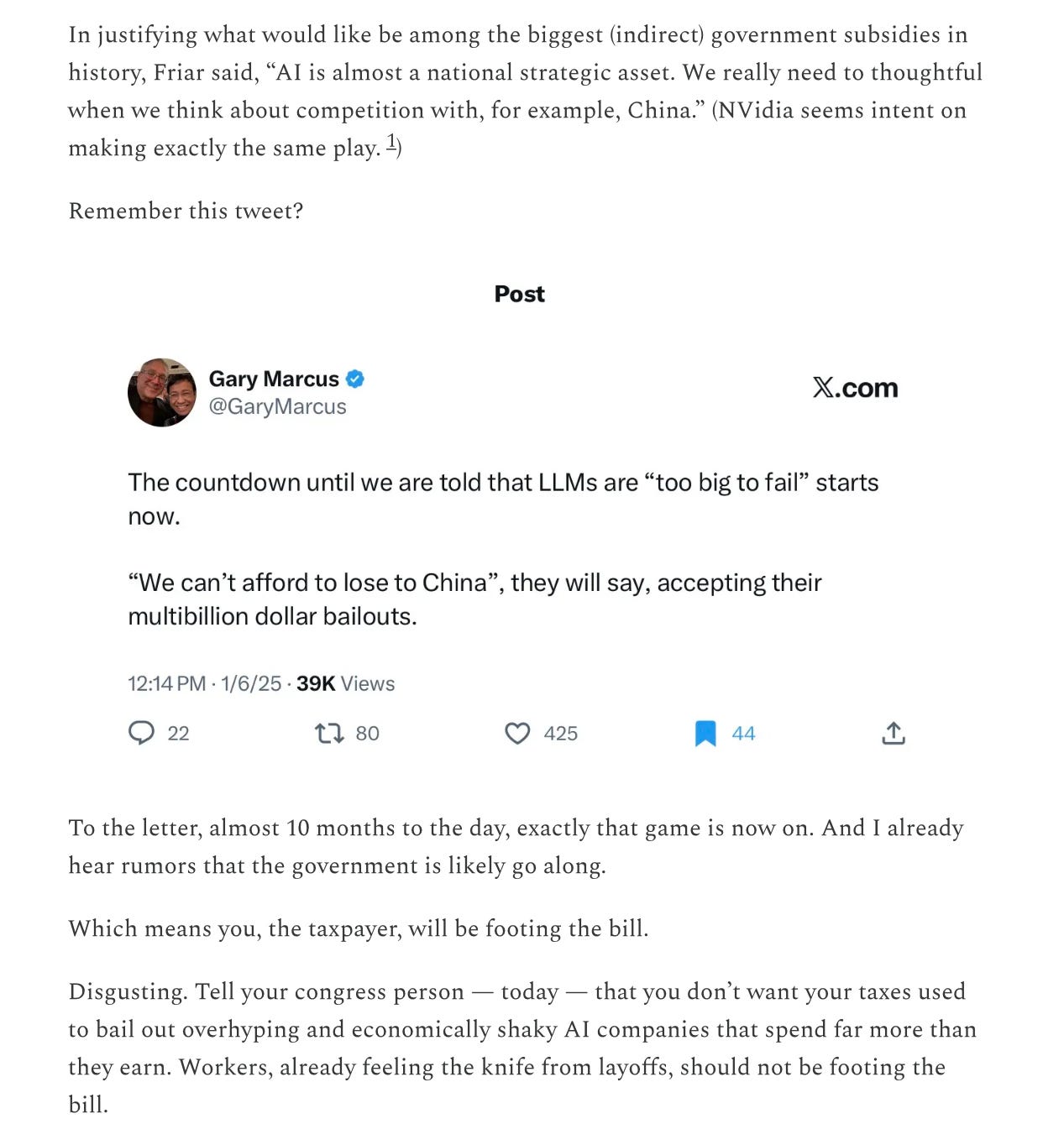

But it is no longer about lying to employees. It is about directly lying to the American public. Events of the last couple of days, surrounding OpenAI CFO Sarah Friar’s call on Wednesday for loan guarantees, have brought things to a new level.

The very idea of having the US government bail out OpenAI from its reckless spending is outrageous, as I explained here:

And I was far, far from alone in my fury. To fully understand what I am about to reveal, you need to understand that anger rapidly ricocheted across Washington and the entire nation. Here are two examples. The first is from a prominent Republican governor:

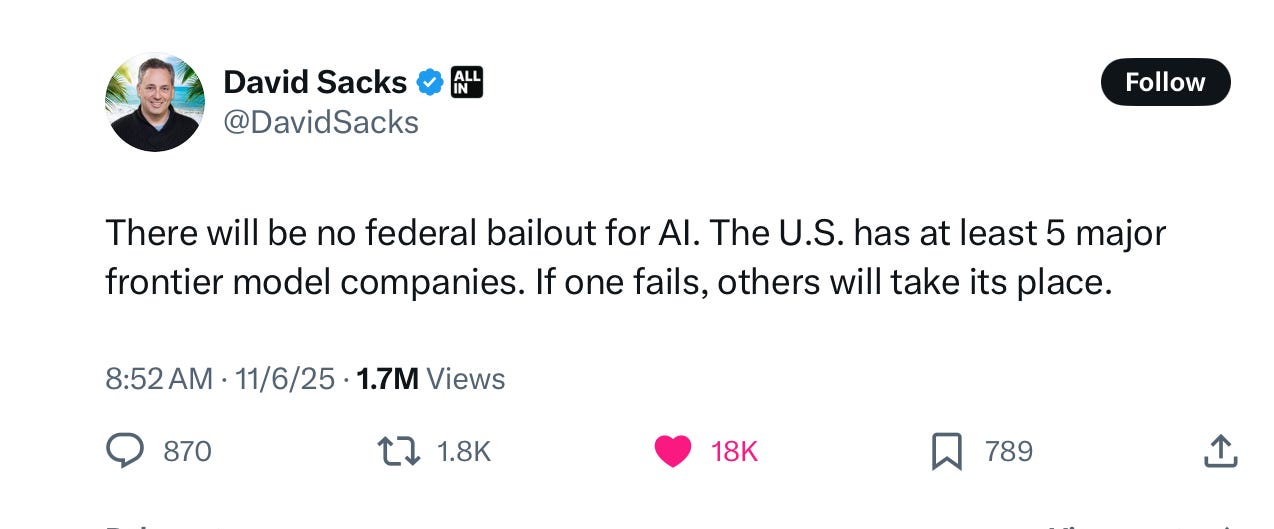

And here is another, from the White House AI Czar:

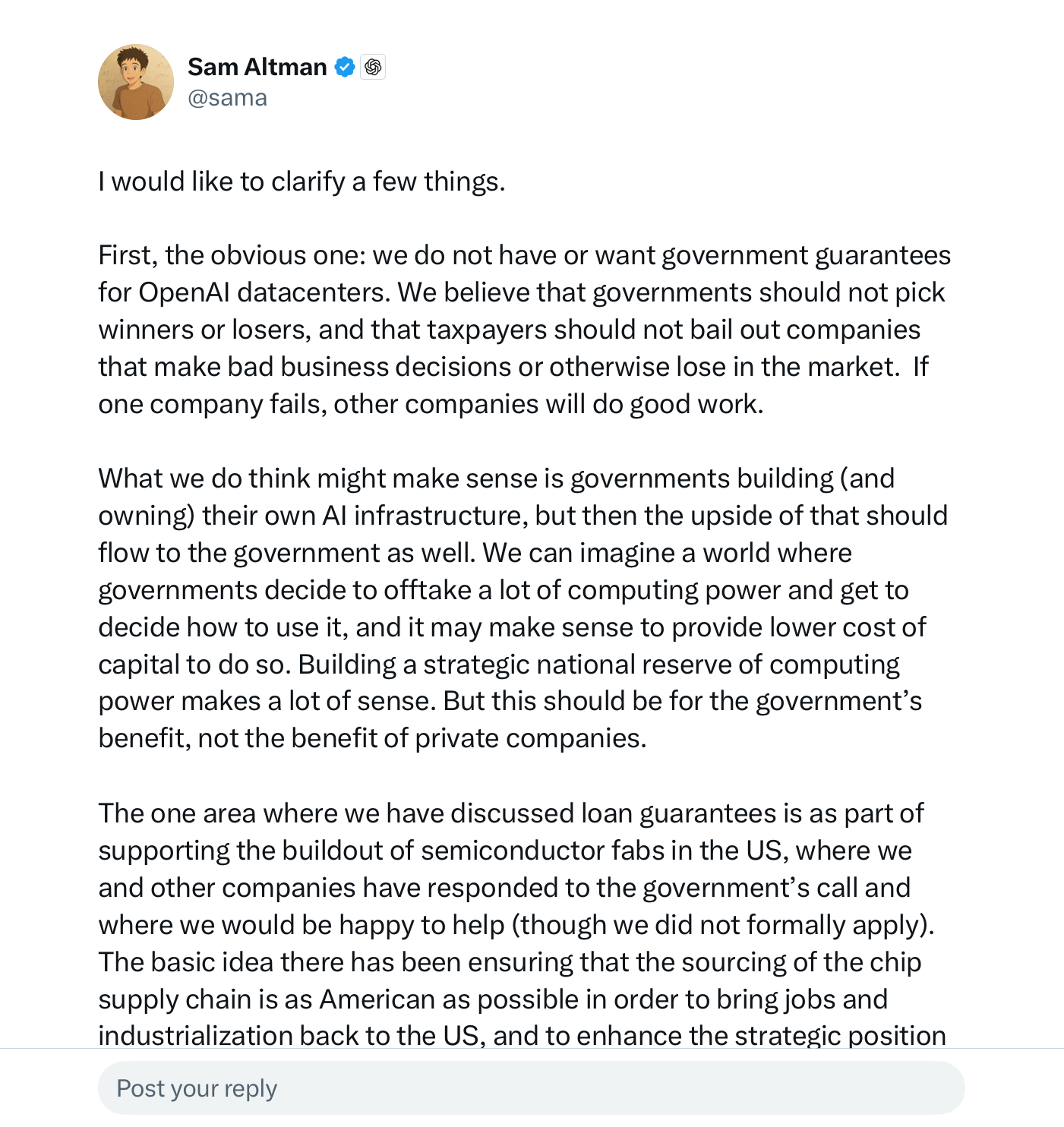

Altman, sensing that he had massively blundered, wrote a meandering fifteen-paragraph reply on X, written in full, capitalized paragraphs (unlike his usual style of short, cryptic remarks written in lowercase). It started like this:

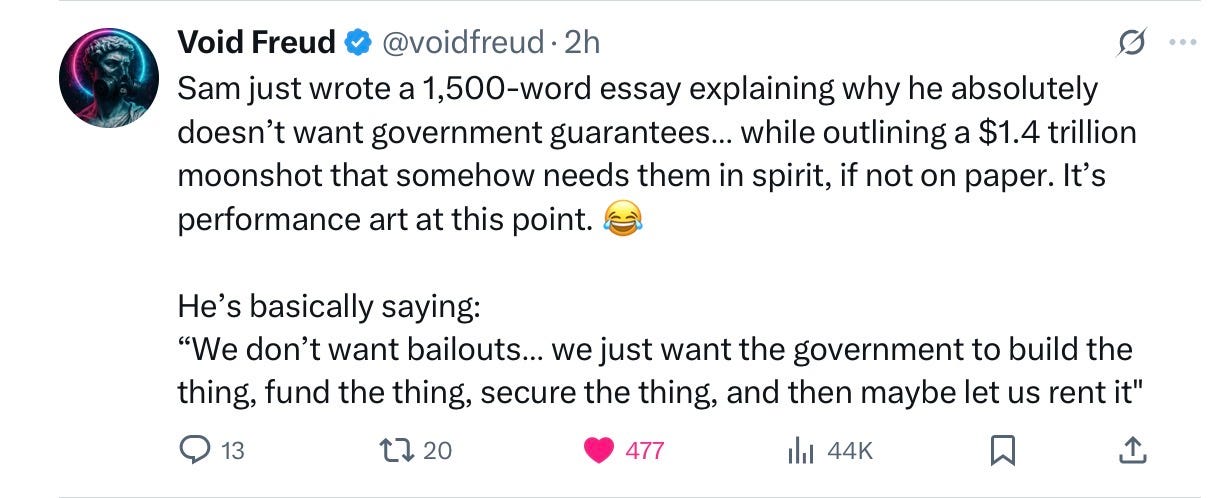

Nobody believed it. There were dozens of hostile, skeptical replies like this:

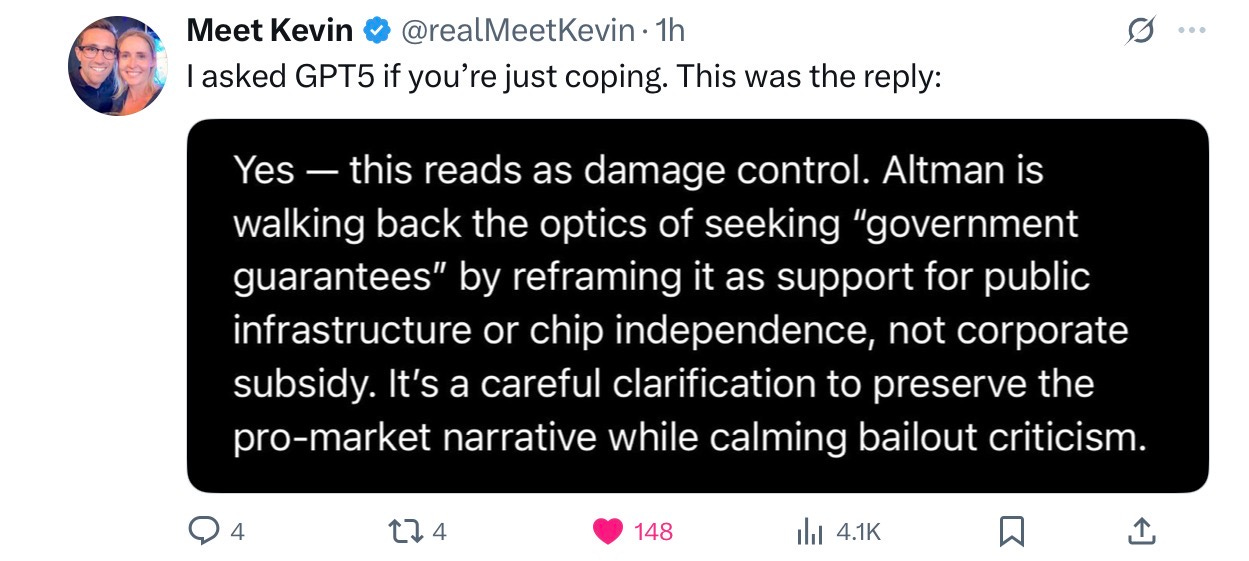

Even ChatGPT wasn’t buying it.

But that’s not the kicker.

§

The kicker is this: Sam was, once again, lying his ass off. What he meant by “we do not have or want government guarantees for OpenAI data centers” was actually that … OpenAI explicitly asked the White House Office of Science and Technology Policy (OSTP) to consider federal loan guarantees, just a week earlier:

In a podcast that was probably recorded in the last several days, Altman also appears to have been laying the groundwork for loan guarantees:

In short, Altman was launching a full-court press for loan guarantees (likely in conjunction with Nvidia, which also just seemed to be laying groundwork for a bailout) when he got caught with his hand in the cookie jar.

And then Altman lied about the whole thing to the entire world. Even David Sacks at the White House may have been conned.

Nobody should ever trust this man. Ever.

That’s what Ilya saw.

Gary Marcus was blocked on X by Kara Swisher in November 2022 for saying that the board did not trust Sam Altman. Marcus remains blocked by Ms. Swisher to this day.
