(Image credit: European Southern Observatory)

Most of the alien civilizations that ever dotted our galaxy have probably killed themselves off already.

That's the takeaway of a new study, published Dec. 14 to the arXiv database, which used modern astronomy and statistical modeling to map the emergence and death of intelligent life in time and space across the Milky Way. The results amount to a more precise 2020 update of a famous equation that Search for Extraterrestrial Intelligence (SETI) founder Frank Drake wrote in 1961. The Drake equation, popularized by physicist Carl Sagan in his "Cosmos" miniseries, relied on a number of unknown variables, such as the prevalence of planets in the universe, which was then an open question.
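For reference, the Drake equation is usually written as a simple product of factors whose result, N, estimates the number of civilizations in the Milky Way whose signals we could detect. Here R* is the average rate of star formation, f_p the fraction of stars with planets, n_e the number of potentially habitable planets per such star, f_l the fraction of those where life appears, f_i the fraction where intelligence evolves, f_c the fraction that develop detectable technology, and L the length of time such civilizations keep producing detectable signals. The new study does not simply plug numbers into this formula; the equation is shown here only to make the unknown variables concrete.

$$
N = R_{*} \cdot f_{p} \cdot n_{e} \cdot f_{l} \cdot f_{i} \cdot f_{c} \cdot L
$$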

This new paper, authored by three Caltech physicists and one high school student, is much more practical. It says where and when life is most likely to occur in the Milky Way, and identifies the most important factor affecting its prevalence: intelligent creatures' tendency toward self-annihilation.

The authors looked at a range of factors presumed to influence the development of intelligent life, such as the prevalence of sunlike stars harboring Earth-like planets; the frequency of deadly, radiation-blasting supernovas; the probability of and time necessary for intelligent life to evolve if conditions are right; and the possible tendency of advanced civilizations to destroy themselves.


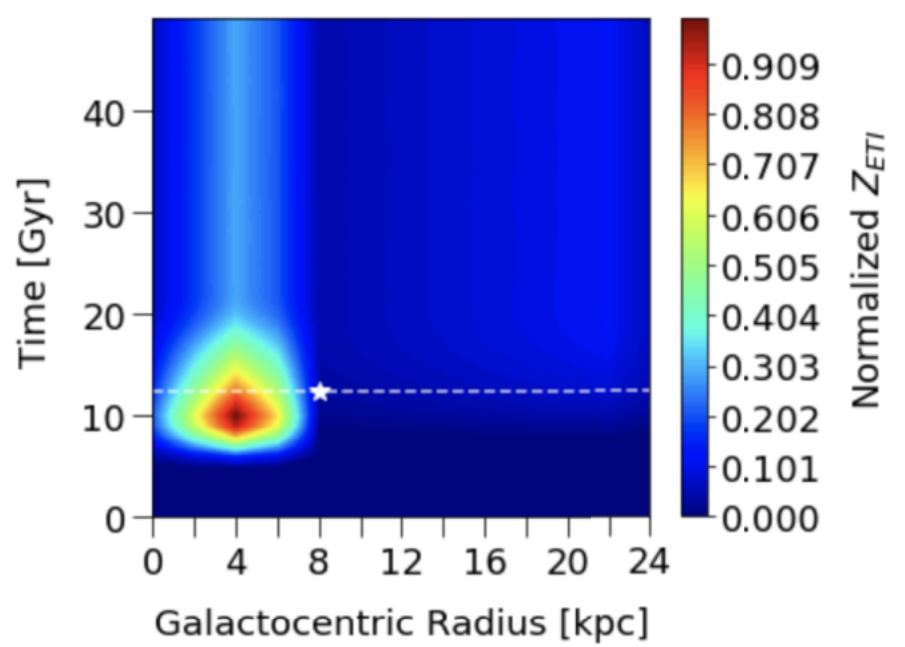

Modeling the evolution of the Milky Way over time with those factors in mind, they found that the probability of life emerging, based on known factors, peaked about 13,000 light-years from the galactic center and 8 billion years after the galaxy formed. Earth, by comparison, is about 25,000 light-years from the galactic center, and human civilization arose on the planet's surface about 13.5 billion years after the Milky Way formed (though simple life emerged soon after the planet formed).

In other words, we're likely a frontier civilization in terms of galactic geography and relative latecomers to the self-aware Milky Way inhabitant scene. But, assuming life does arise reasonably often and eventually becomes intelligent, there are probably other civilizations out there, mostly clustered around that 13,000-light-year band, largely because of the prevalence of sunlike stars there.

A figure from the paper plots the age of the Milky Way in billions of years (y axis) against distance from the galactic center (x axis), finding a hotspot for civilization 8 billion years after the galaxy formed and 13,000 light-years from the galactic center. (Image credit: Cai et al.)

Most of the other civilizations that still exist in the galaxy today are likely young, because intelligent life is fairly likely to eradicate itself over long timescales. Even if the galaxy reached its civilizational peak more than 5 billion years ago, most of the civilizations that were around then have likely self-annihilated, the researchers found.

This last factor is the most uncertain variable in the paper: how often do civilizations kill themselves? But it's also the most important in determining how widespread civilization is, the researchers found. Even an extraordinarily low chance of a given civilization wiping itself out in any given century (say, via nuclear holocaust or runaway climate change) would mean that the overwhelming majority of peak Milky Way civilizations are already gone.
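To see why even a tiny per-century risk adds up, here is a minimal sketch, not the researchers' actual model. It assumes a hypothetical constant chance p of self-destruction in every century, independent from one century to the next, so the fraction of civilizations surviving n centuries is (1 - p)^n. The function name `survival_fraction` and the probabilities tried are illustrative assumptions, applied over the 5 billion years mentioned above.

```python
import math


def survival_fraction(p_per_century: float, years: float) -> float:
    """Fraction of civilizations still alive after `years`.

    Hypothetical simplification: each century carries the same independent
    chance `p_per_century` (0 <= p < 1) of self-destruction.
    """
    centuries = years / 100
    # (1 - p) ** n, computed via log1p so very small p stays numerically accurate
    return math.exp(centuries * math.log1p(-p_per_century))


# Illustrative (made-up) per-century risks, applied over 5 billion years
for p in (1e-6, 1e-7, 1e-8):
    print(f"p = {p:g}: surviving fraction = {survival_fraction(p, 5e9):.3g}")
```

Five billion years is 50 million centuries, so even a one-in-a-million risk per century leaves a surviving fraction of roughly e^-50, effectively zero.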

The paper has been submitted to a journal for publication and is awaiting peer review.

Originally published on Live Science.

Rafi joined Live Science in 2017. He has a bachelor's degree in journalism from Northwestern University’s Medill School of Journalism. You can find his past science reporting at Inverse, Business Insider and Popular Science, and his past photojournalism on the Flash90 wire service and in the pages of The Courier Post of southern New Jersey.