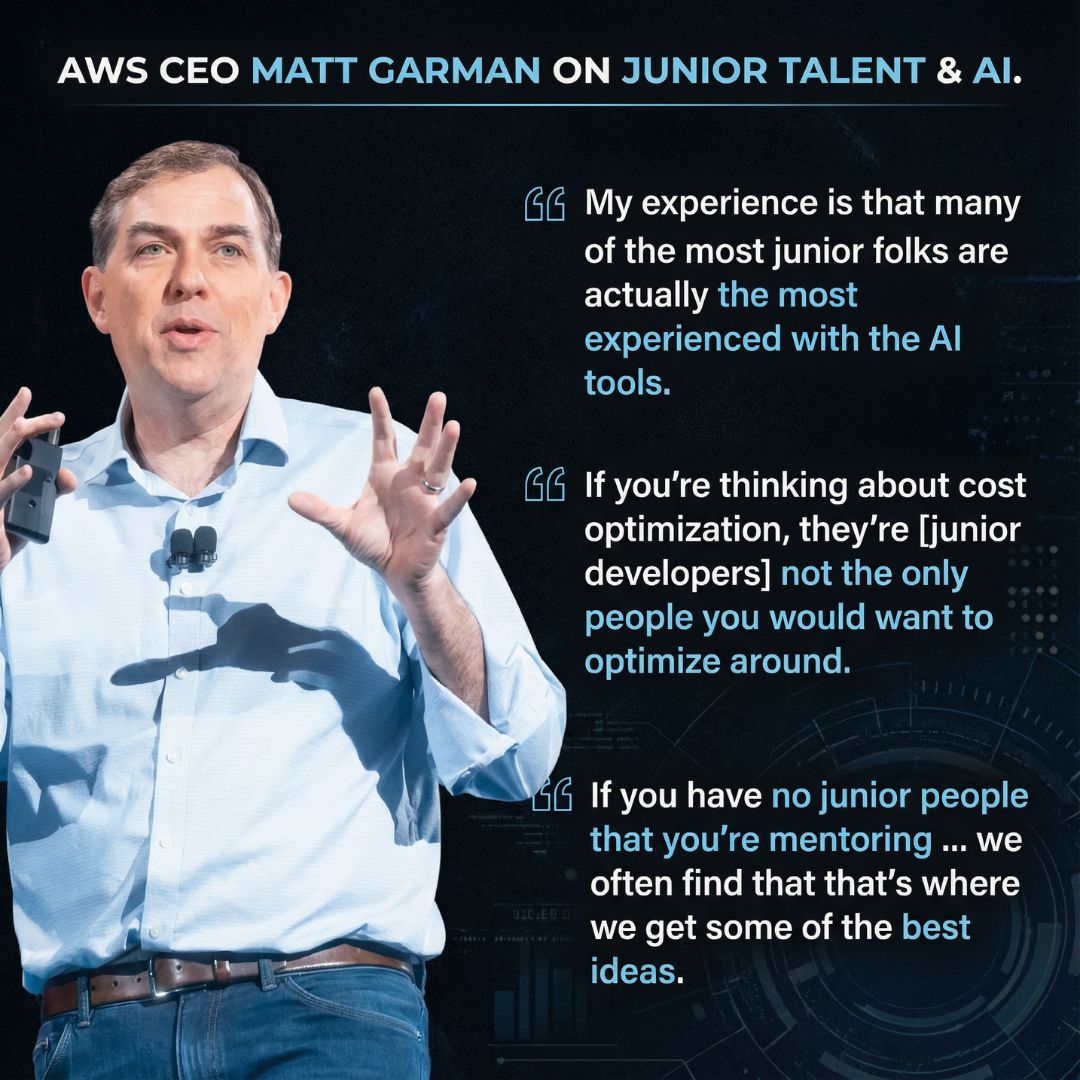

AWS CEO Matt Garman outlined three solid reasons why companies should not focus on cutting junior developer roles, noting that they “are actually the most experienced with the AI tools”.

3 Reasons AI Should Not Replace Junior Developers

In a tech world obsessed with AI replacing human workers, Matt Garman, CEO of Amazon Web Services (AWS), is pushing back against one of the industry’s most popular cost-cutting ideas.

Speaking on WIRED’s The Big Interview podcast, Garman had a bold message for companies racing to cut costs with AI.

He was asked to explain why he once called replacing junior employees with AI “one of the dumbest ideas” he’d ever heard, and to expand on how he believes agentic AI will actually change the workplace in the coming years.

1) Junior Devs Often Know AI Tools Better

First, junior employees are often better with AI tools than senior staff.

“Number one, my experience is that many of the most junior folks are actually the most experienced with the AI tools. So they're actually most able to get the most out of them.”

Fresh grads have grown up with new technology, so they adapt quickly. Many of them learn AI-powered tools while studying or during internships. They tend to explore new features, find faster ways to write code, and figure out how to get the best results from AI agents.

According to the 2025 Stack Overflow Developer Survey, 55.5% of early-career developers reported using AI tools daily in their development process, a higher share than among more experienced developers.

This comfort with new tools allows them to work more efficiently. In contrast, senior developers have established workflows and may be slower to adopt new tools. Recent research shows that over half of Gen Z employees are actually helping senior colleagues upskill in AI.

2) Junior Developers Shouldn’t Be The Default Cost-Saving Move

Second, junior staff are usually the least expensive employees.

“Number two, they're usually the least expensive because they're right out of college, and they generally make less. So if you're thinking about cost optimization, they're not the only people you would want to optimize around.”

Junior employees usually earn much less in salary and benefits, so cutting them does not deliver large savings. For a company trying to save money, it simply doesn’t make much financial sense.

So, when companies talk about increasing profit margins, junior employees should not be the default or only target. Real cost-cutting means looking at the whole company, because there are plenty of other places where expenses can be trimmed.

In fact, 30% of companies that laid off workers expecting savings ended up increasing expenses, and many had to rehire later.

3) Removing Juniors Breaks the Talent Pipeline

Third, companies need fresh talent.

“Three, at some point, that whole thing explodes on itself. If you have no talent pipeline that you're building and no junior people that you're mentoring and bringing up through the company, we often find that that's where we get some of the best ideas.”

Think of a company like a sports team. If you only keep veteran players and never recruit rookies, what happens when those veterans retire? You are left with no one who knows how to play the game.

Hiring people straight out of college also brings new ways of thinking into the workplace. They bring fresh ideas shaped by the latest trends, along with the motivation to innovate.

More importantly, they form the foundation of a company’s future workforce. If a company stops hiring junior employees altogether, it cuts off its own talent pipeline. Over time, that leaves fewer people ready to be promoted into leadership from within.

A Deloitte report also notes that the tech workforce is expected to grow at roughly twice the rate of the overall U.S. workforce, highlighting the demand for tech talent. Without a strong pipeline of junior developers coming in, companies might face a tech talent shortage.

When too few junior hires are trained today, teams struggle to fill roles tomorrow, especially as projects scale.

Bottom Line

This isn’t just corporate talk. As the leader of one of the world’s largest cloud computing platforms, serving everyone from Netflix to the U.S. intelligence agencies, Garman has a front-row seat to how companies are actually using AI.

And what he is seeing makes him worried that short-term thinking could damage businesses for years to come. Garman’s point is grounded in long-term strategy: a company that relies solely on AI to handle tasks without training new talent could find itself short of skilled people.

Still, Garman admits the next few years will be bumpy. “_Your job is going to change_,” he said. He believes AI will make both companies and their employees more productive.

When technology makes something easier, people want more of it. AI lets teams build software faster, allowing companies to develop more products, enter new markets, and serve more customers.

Developers will be responsible for more than just writing code, and adapting quickly to new technologies will become essential.

Geoffrey Hinton has also advised that Computer Science degrees remain essential, which directly supports Garman’s point: fresh talent with a strong understanding of core fundamentals becomes crucial for filling these higher-value roles of the future.

Even so, Garman ends on a hopeful note.

“I’m very confident in the medium to longer term that AI will definitely create more jobs than it removes at first,” Garman said.