Fluorite is the first console-grade game engine fully integrated with Flutter.

It reduces complexity by letting you write your game code directly in Dart and use all of Dart's great developer tools. With the FluoriteView widget you can add multiple simultaneous views of your 3D scene, as well as share state between game Entities and UI widgets - the Flutter way!

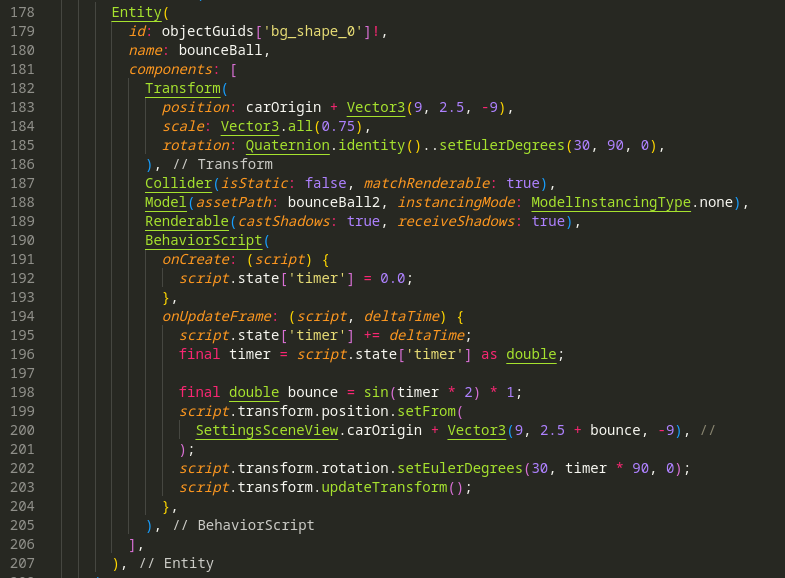

High-performance ECS core

At the heart of Fluorite lies a data-oriented ECS (Entity-Component-System) architecture. It's written in C++ to allow for maximum performance and targeted optimizations, so it runs well even on lower-end and embedded hardware. At the same time, it lets you write game code in Dart using familiar high-level game APIs, making most of your game development knowledge transferable from other engines.
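To make "data-oriented ECS" concrete, here is a minimal, engine-agnostic sketch in C++. It is not Fluorite's implementation or API: every name in it (`World`, `Position`, `Velocity`, `movement_system`) is a hypothetical stand-in, and it assumes the simplest possible case, where every entity has every component. The point it illustrates is that components live in contiguous arrays and a system is a plain loop over them.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical names throughout; this is an illustration, not Fluorite's API.
using Entity = std::uint32_t;

struct Position { float x, y; };
struct Velocity { float dx, dy; };

// Components are stored in contiguous arrays indexed by entity id, so a
// system walks memory linearly instead of chasing pointers per object.
struct World {
    std::vector<Position> positions;
    std::vector<Velocity> velocities;

    // Creates an entity that has both components and returns its id.
    Entity spawn(Position p, Velocity v) {
        positions.push_back(p);
        velocities.push_back(v);
        return static_cast<Entity>(positions.size() - 1);
    }
};

// A "system" is a plain function that updates the components of every entity.
void movement_system(World& world, float dt) {
    assert(world.positions.size() == world.velocities.size());
    for (std::size_t i = 0; i < world.positions.size(); ++i) {
        world.positions[i].x += world.velocities[i].dx * dt;
        world.positions[i].y += world.velocities[i].dy * dt;
    }
}
```

A real ECS also has to handle entities that own only some components, as well as entity removal; this sketch leaves both out to keep the memory layout visible.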

Model-defined touch trigger zones

This feature enables 3D Artists to define “clickable” zones directly in Blender, and to configure them to trigger specific events! Developers can then listen to onClick events with the specified tags to trigger all sorts of interactions! This simplifies the process of creating spatial 3D UI, enabling users to engage with objects and controls in a more intuitive way.
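The tag-based listening described above can be pictured as a lookup from tag to handlers. The sketch below is a language-neutral illustration written in C++, not Fluorite's Dart API, which this page does not show. `ClickDispatcher`, `on_click`, and `dispatch` are hypothetical names, and it assumes the engine has already resolved a touch to the tag of the zone that was hit.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical type for illustration; not part of Fluorite.
class ClickDispatcher {
public:
    using Handler = std::function<void()>;

    // Registers a handler for the tag an artist assigned to a zone.
    void on_click(const std::string& tag, Handler handler) {
        handlers_[tag].push_back(std::move(handler));
    }

    // Runs every handler registered for the tag of the zone that was hit
    // and returns how many handlers ran (0 if the tag is unknown).
    std::size_t dispatch(const std::string& tag) const {
        auto it = handlers_.find(tag);
        if (it == handlers_.end()) return 0;
        for (const auto& handler : it->second) handler();
        return it->second.size();
    }

private:
    std::unordered_map<std::string, std::vector<Handler>> handlers_;
};
```

Keying handlers by tag means the artist's zones and the developer's code only need to agree on a string, so zones can be added or moved in the model without touching the handlers.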

Console-grade 3D Rendering

Powered by Google's Filament renderer, Fluorite leverages modern graphics APIs such as Vulkan to deliver stunning, hardware-accelerated visuals comparable to those found on gaming consoles. With support for physically accurate lighting and assets, post-processing effects, and custom shaders, developers can create visually rich and captivating environments.

Hot Reload

Thanks to its Flutter/Dart integration, Fluorite's scenes are enabled for Hot Reload! Developers can update their scenes and see the changes within just a couple of frames, which significantly speeds up development and enables rapid iteration on game mechanics, assets, and code.

More coming soon...